Explore the essentials of data privacy in AI customer service. From GDPR compliance to encryption and data minimization, learn how to protect sensitive information in the age of AI.


Data privacy in AI customer service is a trust issue that can make or break customer relationships. When someone shares their account details, payment information, or personal problems with an AI system, they're placing enormous faith in that company's ability to protect their information. Understanding how AI handles this data, the associated risks, and how businesses can protect customer privacy has never been more important.

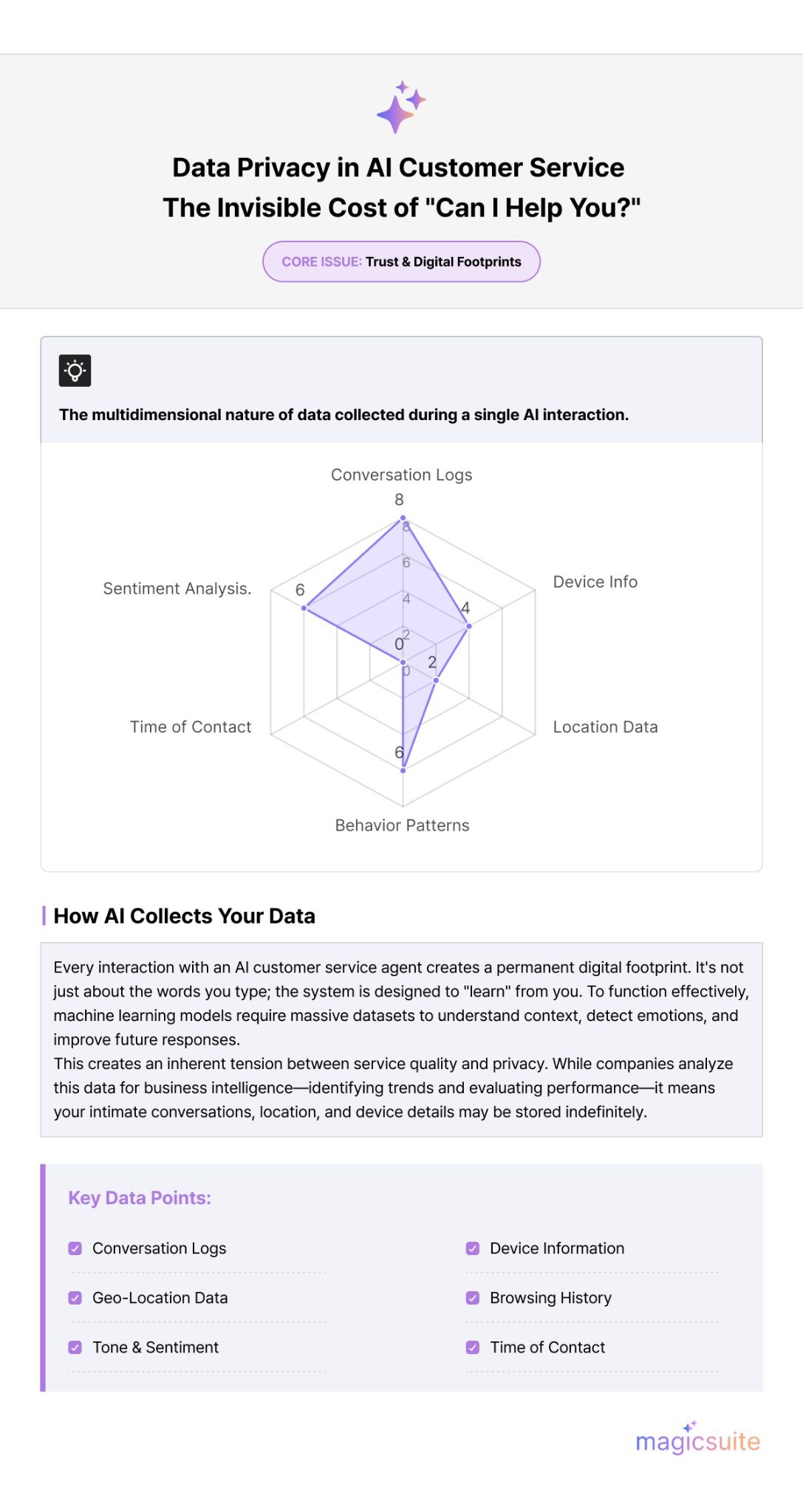

Every interaction with an AI customer service system creates a digital footprint. When you type a message to a chatbot or speak to a virtual assistant, the system can capture far more than your words. Depending on the platform, it may record the time of contact, your device information, location data, browsing history, and even patterns in how you communicate.

AI systems need this data to function effectively. Machine learning models analyze past conversations to improve future responses. They learn from millions of interactions to understand context, detect emotions, and provide relevant solutions. This training process requires massive amounts of data, creating an inherent tension between service quality and privacy protection.

Companies also use conversation data for business intelligence. They analyze trends, identify common problems, and evaluate system performance. While this helps improve services, it means your private conversations might be reviewed, categorized, and stored indefinitely unless proper safeguards are in place.

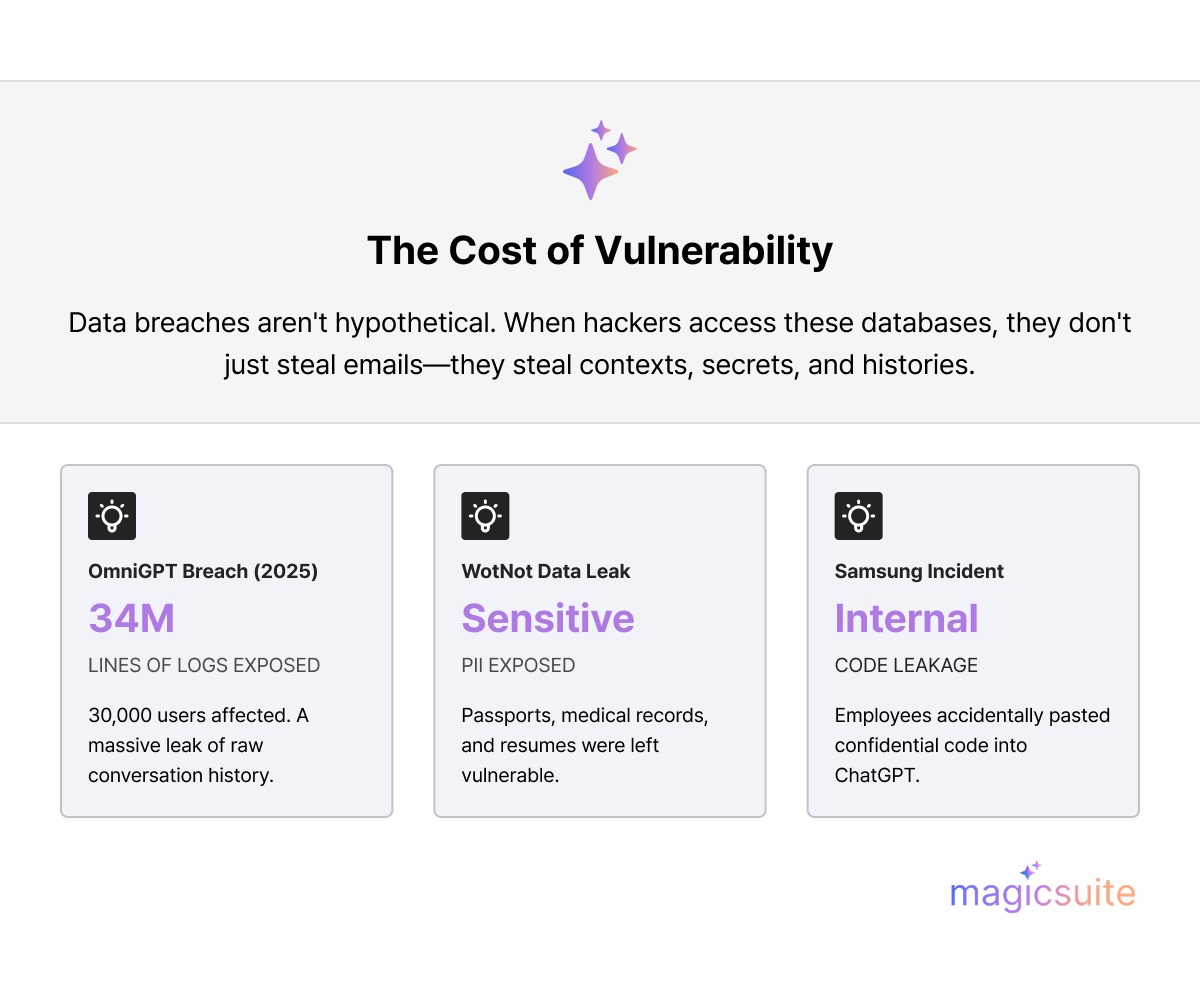

Data breaches are the most obvious threat to data privacy. When attackers access customer service databases, they don't just steal names and email addresses; they gain access to detailed conversation histories that might reveal financial information, health concerns, family situations, or business secrets. A single breach can expose years of intimate customer interactions.

Unauthorized data sharing poses another significant risk. Some companies sell or share customer data with third parties for marketing purposes. Even when technically legal under terms of service, customers often don't realize their AI conversations might be used to build advertising profiles or sold to data brokers.

Here are real-world examples of data breaches:

AI systems themselves can create privacy vulnerabilities. Models sometimes retain specific details from their training data and may reproduce another customer's information when responding to a query. This phenomenon, a form of data leakage, occurs when a model memorizes its training examples rather than generalizing from them.

Employee access presents a human factor risk. Customer service representatives and technical staff often have broad access to conversation logs. Without strict controls, this creates opportunities for unauthorized viewing, data theft, or misuse of personal information.


Governments worldwide are implementing strict data privacy regulations that directly affect AI customer service. The European Union's General Data Protection Regulation (GDPR) sets high standards for data collection. It requires a lawful basis for processing, such as explicit consent, and clear privacy notices. It also gives customers the right to access their data and to request its deletion. In the United States, the California Consumer Privacy Act (CCPA) and similar state laws grant consumers new rights over their personal information. These laws require businesses to disclose what data they collect and allow customers to opt out of the sale of their data. They also impose significant penalties for violations.
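The sketch below shows how a support platform might serve requests for access and deletion. The `CustomerDataStores` class is hypothetical. Its two in-memory dictionaries stand in for the real systems, such as CRM records and chat-log archives, where customer data lives. It is an illustration, not a compliance implementation.

```python
from dataclasses import dataclass, field


# Hypothetical in-memory stores standing in for the real systems
# (CRM, chat-log archive) that hold a customer's data.
@dataclass
class CustomerDataStores:
    profiles: dict = field(default_factory=dict)     # customer_id -> profile dict
    transcripts: dict = field(default_factory=dict)  # customer_id -> list of messages

    def export(self, customer_id: str) -> dict:
        """Right of access: gather everything held about one customer."""
        return {
            "profile": self.profiles.get(customer_id, {}),
            "transcripts": list(self.transcripts.get(customer_id, [])),
        }

    def erase(self, customer_id: str) -> int:
        """Deletion request: remove the customer's data from every store.

        Returns how many stores actually held data for this customer.
        """
        removed = 0
        for store in (self.profiles, self.transcripts):
            if store.pop(customer_id, None) is not None:
                removed += 1
        return removed
```

In practice, an erasure request typically also has to reach backups, analytics copies, and any datasets used for model training. That is where most of the engineering effort goes.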

Industry-specific regulations add another layer. In the United States:

- Healthcare providers using AI must comply with HIPAA.
- Financial institutions must follow rules on sensitive financial data, such as the Gramm-Leach-Bliley Act.
- Online services directed at children under 13 must follow COPPA's strict protection rules.

Each industry carries unique privacy requirements that AI systems must respect.

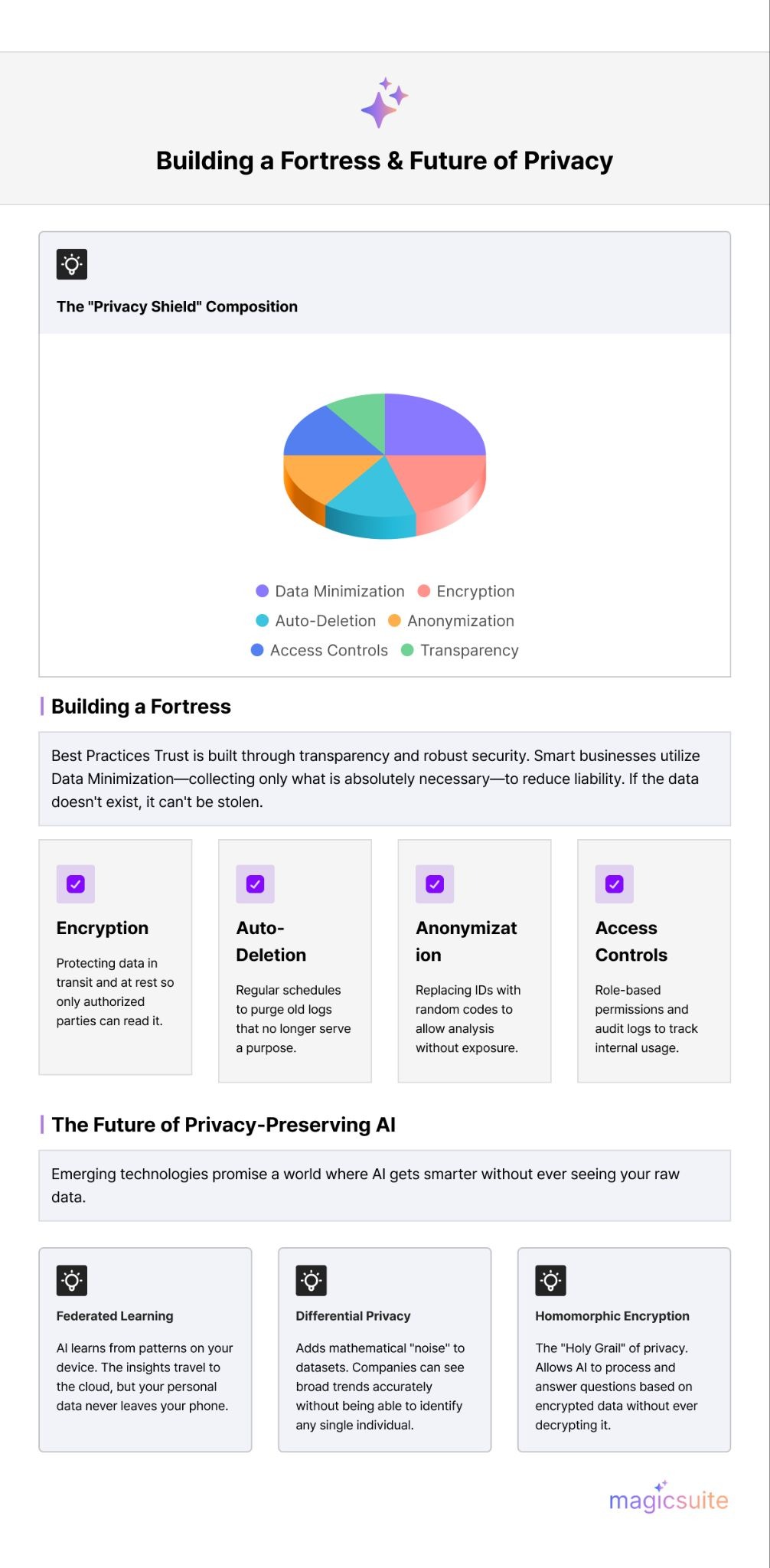

Smart businesses implement data minimization strategies. Instead of collecting everything possible, they gather only the information necessary for specific purposes. This reduces both storage costs and privacy risks. When data doesn't exist, it can't be stolen or misused.
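One way to apply data minimization is to filter each incoming support record against an allowlist of fields. Obvious sensitive values are then masked before anything is stored. The sketch assumes a hypothetical `ALLOWED_FIELDS` set and a deliberately rough card-number regex. A real deployment would rely on a vetted PII-detection tool.

```python
import re

# Hypothetical allowlist: the only fields this support workflow actually needs.
ALLOWED_FIELDS = {"order_id", "issue_category", "message"}

# Rough pattern for 13-16 digit card numbers, optionally split by spaces or dashes.
# Illustrative only; it will miss some formats and may over-match others.
CARD_PATTERN = re.compile(r"\b(?:\d[ -]?){12,15}\d\b")


def minimize(record: dict) -> dict:
    """Keep only allowlisted fields and mask card-like numbers in free text."""
    kept = {k: v for k, v in record.items() if k in ALLOWED_FIELDS}
    if "message" in kept:
        kept["message"] = CARD_PATTERN.sub("[REDACTED CARD]", kept["message"])
    return kept
```

Fields such as IP address and device type never reach storage, so they cannot leak in a later breach.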

Encryption protects data both in transit and at rest. Strong encryption means that even if attackers access databases, they cannot read the actual content without decryption keys. End-to-end encryption ensures that only the customer and authorized systems can access the contents of the conversation.
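For data in transit, much of the protection comes from configuring TLS correctly on every connection between the chat client, the AI service, and backend systems. This sketch uses only Python's standard `ssl` module, and the chat backend it mentions is illustrative. It does not cover encryption at rest, which usually relies on an authenticated cipher such as AES-GCM from a vetted library.

```python
import ssl


def make_client_tls_context() -> ssl.SSLContext:
    """Build a TLS client context for calls to a hypothetical chat backend.

    create_default_context() already enables certificate verification and
    hostname checking; here we additionally refuse anything older than TLS 1.2.
    """
    ctx = ssl.create_default_context(ssl.Purpose.SERVER_AUTH)
    ctx.minimum_version = ssl.TLSVersion.TLSv1_2
    return ctx
```

The resulting context can be passed to standard clients, for example `urllib.request.urlopen(url, context=ctx)`.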

Regular data deletion policies help minimize exposure. Rather than storing conversation logs forever, companies should implement automatic deletion schedules. Removing old data that no longer serves a business purpose reduces the potential impact of breaches.
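An automatic deletion schedule can be as simple as a periodic job that drops transcripts older than a retention window. The 90-day `RETENTION` value and the `created_at` field below are assumptions for illustration. The right window depends on legal and business requirements.

```python
from datetime import datetime, timedelta, timezone

# Hypothetical policy: transcripts are kept for 90 days, then deleted.
RETENTION = timedelta(days=90)


def purge_expired(transcripts: list[dict], now: datetime) -> list[dict]:
    """Return only transcripts still inside the retention window.

    Each transcript is assumed to carry a timezone-aware 'created_at'.
    In a real system this would be a scheduled DELETE against the log store.
    """
    cutoff = now - RETENTION
    return [t for t in transcripts if t["created_at"] >= cutoff]
```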

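Anonymization and pseudonymization let companies analyze trends without exposing individual identities. Pseudonymization replaces identifying information, such as names or account numbers, with codes or tokens. Anonymization removes or generalizes identifiers so that records can no longer be linked back to a person. Both allow businesses to improve AI systems while protecting customer privacy.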
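A common way to pseudonymize identifiers is a keyed hash rather than purely random codes. The same customer always maps to the same token, so analysts can still link a customer's repeat contacts. This sketch uses HMAC-SHA256 from Python's standard library. The key value and the 16-character truncation are illustrative choices, not requirements.

```python
import hashlib
import hmac


def pseudonymize(customer_id: str, secret_key: bytes) -> str:
    """Replace a customer identifier with a stable keyed token (HMAC-SHA256).

    The same ID always maps to the same token, so repeat contacts can still be
    counted, but without the key nobody can feasibly recompute or reverse it.
    The output is truncated to 16 hex characters (64 bits) for readability.
    """
    digest = hmac.new(secret_key, customer_id.encode("utf-8"), hashlib.sha256)
    return digest.hexdigest()[:16]
```

Anyone holding the key can recompute the mapping. The key should therefore be stored separately from the pseudonymized data, and the output still counts as pseudonymized rather than anonymized.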

Access controls limit who can view customer data. Role-based permissions ensure employees only access information necessary for their jobs. Detailed audit logs track who accessed what data and when, creating accountability and deterring misuse.

Transparency builds trust. Clear privacy policies written in plain language help customers understand how their data will be used. Opt-in consent for data collection, rather than buried opt-out clauses, demonstrates respect for customer autonomy.
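Role-based permissions and audit logging can be sketched in a few lines. The roles, permission names, and in-memory `audit_log` list here are hypothetical. A production system would load its policy from configuration and write audit entries to an append-only store.

```python
from datetime import datetime, timezone

# Hypothetical role-to-permission mapping.
ROLE_PERMISSIONS = {
    "support_agent": {"read_transcript"},
    "supervisor": {"read_transcript", "export_transcript"},
    "analyst": {"read_aggregates"},
}

# Every access attempt, granted or denied, is recorded here.
audit_log: list[dict] = []


def access(user: str, role: str, action: str, customer_id: str) -> bool:
    """Allow the action only if the role grants it, and log every attempt."""
    allowed = action in ROLE_PERMISSIONS.get(role, set())
    audit_log.append({
        "time": datetime.now(timezone.utc).isoformat(),
        "user": user,
        "role": role,
        "action": action,
        "customer_id": customer_id,
        "allowed": allowed,
    })
    return allowed
```

The sketch logs denied attempts as well as granted ones, which makes misuse visible during later review.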

Emerging technologies promise better privacy protection without sacrificing service quality. Federated learning allows AI models to improve by learning from data patterns across many devices while the raw data stays on those devices. Only model updates are sent back and combined. The AI gets smarter while personal information stays largely private.
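Federated learning is often implemented with federated averaging. Each device trains on its own data, and a server combines only the resulting model parameters, weighted by how much data each device holds. The toy sketch below uses a one-parameter linear model and plain gradient descent, chosen for readability. Real systems train neural networks and typically add protections such as secure aggregation.

```python
# Toy federated averaging on a one-parameter model y = w * x.
# Each "device" trains on its own (x, y) pairs; only the weight leaves the device.


def local_update(w: float, data: list[tuple[float, float]],
                 lr: float = 0.01, epochs: int = 20) -> float:
    """Run gradient descent on mean squared error, using local data only."""
    for _ in range(epochs):
        grad = sum(2 * (w * x - y) * x for x, y in data) / len(data)
        w -= lr * grad
    return w


def federated_round(w_global: float, devices: list[list[tuple[float, float]]]) -> float:
    """Each device trains locally; the server averages weights by sample count."""
    updates = [(local_update(w_global, d), len(d)) for d in devices]
    total = sum(n for _, n in updates)
    return sum(w * n for w, n in updates) / total
```

Take two devices whose data both follow y = 3x. Ten rounds starting from w = 0.0 bring the shared weight very close to 3.0, even though no device ever sends its raw (x, y) pairs.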

Differential privacy adds carefully calibrated statistical noise to query results or datasets. This allows accurate trend analysis while preventing the identification of specific individuals, so companies can improve services using collective data while protecting individual privacy.

Homomorphic encryption enables computations on encrypted data without first decrypting it. In principle, an AI system could analyze your question and provide an answer without ever seeing your actual words in readable form. In practice, computing on encrypted data remains much slower than computing on plaintext, which limits its use today.
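A classic way to add calibrated noise for differential privacy is the Laplace mechanism. This sketch applies it to a simple count of how many customers matched some condition. The `private_count` helper is hypothetical, and `epsilon` is a parameter you would choose. Python's `random` module is used only for illustration. Real deployments should rely on a vetted differential-privacy library with a secure noise source.

```python
import random


def laplace_noise(scale: float, rng: random.Random) -> float:
    """Sample Laplace(0, scale) as the difference of two exponential draws."""
    return rng.expovariate(1 / scale) - rng.expovariate(1 / scale)


def private_count(records: list[bool], epsilon: float, rng: random.Random) -> float:
    """Release a count with epsilon-differential privacy via the Laplace mechanism.

    Adding or removing one customer changes a count by at most 1 (sensitivity 1),
    so Laplace noise with scale 1/epsilon suffices. Smaller epsilon means more
    noise and stronger privacy.
    """
    true_count = sum(records)
    return true_count + laplace_noise(1 / epsilon, rng)
```

The noise has a fixed scale of 1/epsilon, so it barely affects large aggregates while still masking whether any single customer is included. Each additional release from the same data consumes more of the privacy budget.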

Customers should actively protect their privacy when using AI customer service through the following:

Data privacy in AI customer service represents a shared responsibility. Companies must implement robust protections and transparent policies. Regulators need to create clear, enforceable standards. Customers should stay informed and make conscious choices about data sharing.

The future of customer service depends on getting this balance right. AI offers tremendous benefits in speed, availability, and personalization. But these advantages mean nothing if customers don't trust systems with their personal information. By prioritizing privacy alongside innovation, businesses can build AI customer service that respects human dignity while delivering exceptional experiences.


Luke is a technical market researcher with a deep passion for analyzing emerging technologies and their market impact. With a keen eye for data and trends, Luke provides valuable insights that help shape strategic decisions and product innovations. His expertise lies in evaluating industry developments and uncovering key opportunities in the ever-evolving tech landscape.