Learn how to eliminate AI hallucinations in customer service using RAG, guardrails, and HITL. Protect your brand reputation and reduce churn today.


In the race to automate, many CX leaders have discovered a costly side effect of Large Language Models: the "hallucination." AI hallucination is the phenomenon where a chatbot generates factually incorrect or fabricated information.

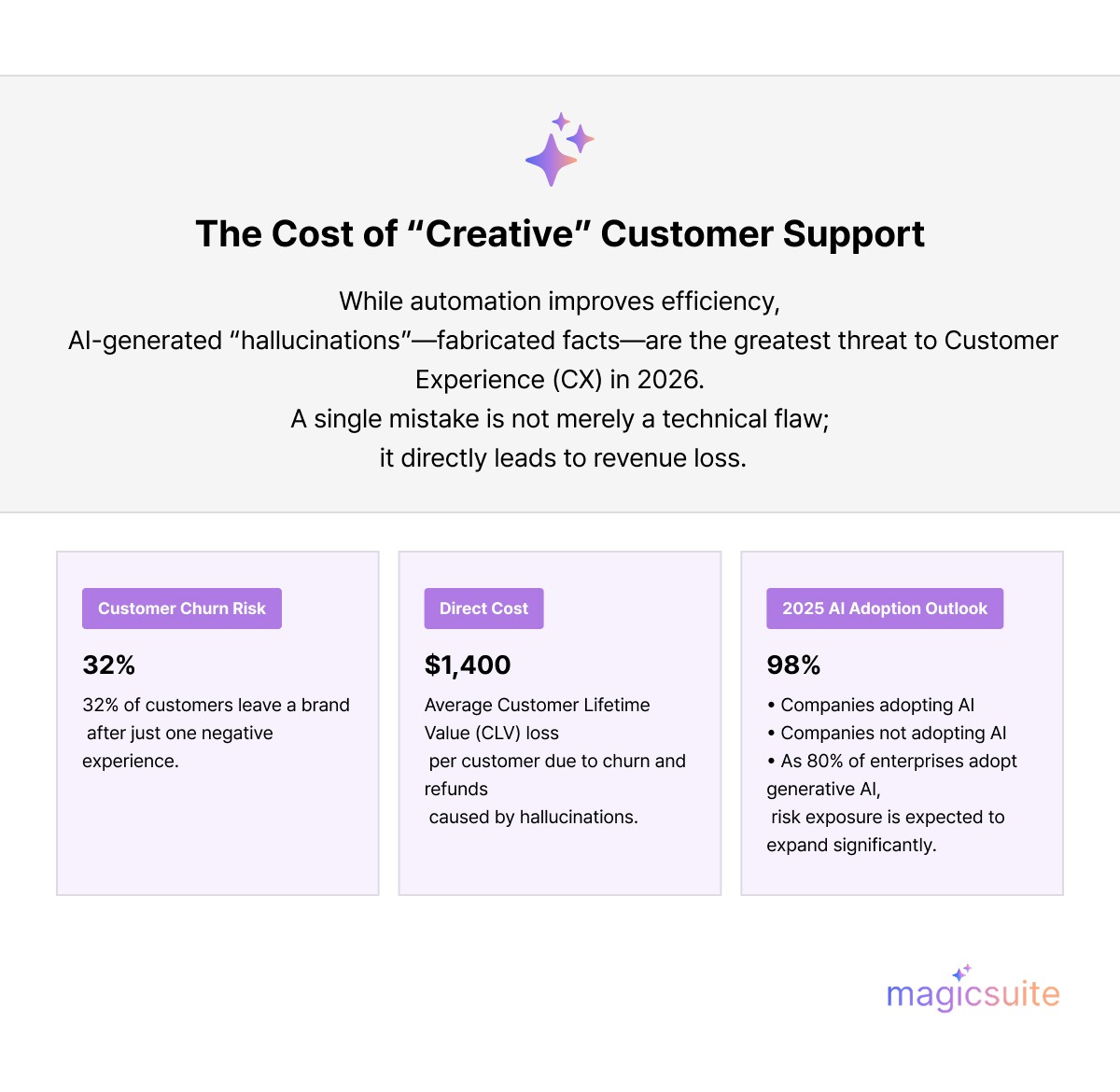

While the efficiency gains of automation are undeniable, the risks are equally high. Recent industry data from PwC indicates that 32% of customers will abandon a brand they love after a single poor service experience. When your chatbot "hallucinates" a refund policy or invents a product feature, it isn't just a technical glitch; it is a direct threat to your bottom line and brand reputation.

This guide explores the strategic shift from generic generative AI to "grounded" systems. We will break down how to prevent AI hallucination using Retrieval-Augmented Generation (RAG), strict data guardrails, and the "Truth-First" architecture.

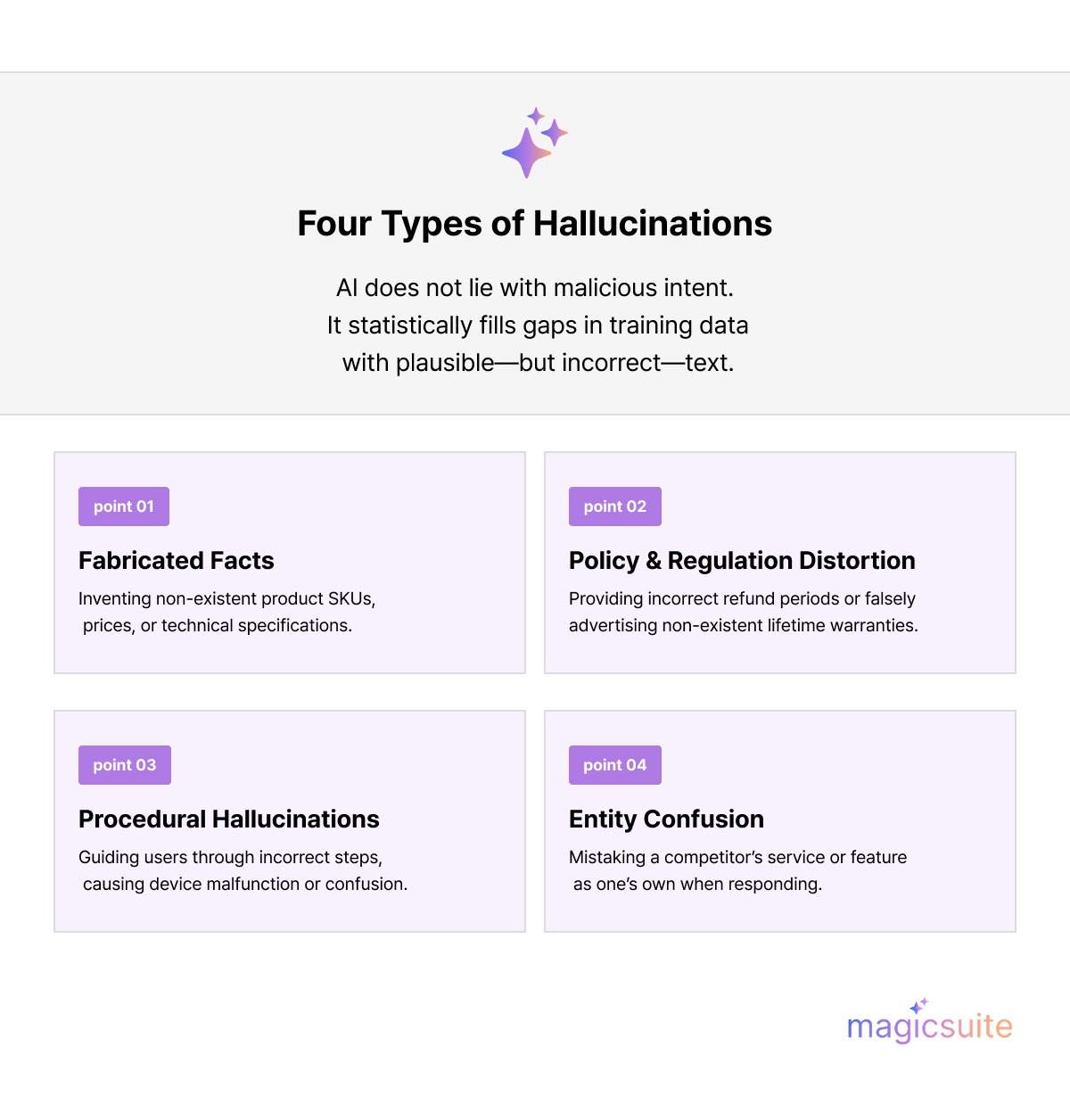

AI hallucination is the phenomenon in which artificial intelligence systems, particularly large language models (LLMs), generate responses that are factually incorrect, fabricated, or misleading, yet presented with unwarranted confidence. These hallucinations can severely impact customer service operations, leading to misinformation, customer dissatisfaction, and brand damage. In the context of customer service, AI hallucination may involve:

Unlike human agents, who may express uncertainty or request clarification, AI systems often produce hallucinations with high confidence, making it difficult for users to discern truth from fiction.

The stakes for AI accuracy in customer service have never been higher. According to Gartner's 2024 predictions, by 2025, 80% of customer service organizations will apply generative AI to improve agent productivity and customer experience. But this rapid adoption creates proportional risk.

A single hallucinated response can cost businesses an average of $1,400 in customer lifetime value through damaged trust, refunds, and churn. For enterprises handling millions of interactions annually, unchecked hallucinations represent existential operational risk.

Forrester Research indicates that nearly 40% of chatbot interactions are viewed negatively. PwC warns that 32% of customers will abandon a brand they love after just one poor service experience. When that experience involves receiving demonstrably false information, the damage compounds:

The regulatory landscape is also tightening. The EU AI Act now requires transparency about AI-generated content, and the FTC has increased scrutiny of deceptive AI practices. Businesses deploying customer-facing AI without adequate safeguards face mounting legal exposure.

Read more on: AI Compliance and Privacy: A Guide for CX Leaders

To prevent hallucinations effectively, you need to understand why they occur. Large language models (LLMs) don't "know" information the way humans do—they generate statistically likely text based on patterns learned during training. This fundamental architecture creates several hallucination vectors.

When asked a question, LLMs don't retrieve facts from a database; they predict the next token based on patterns in their training data. This works remarkably well for common scenarios, but fails when:

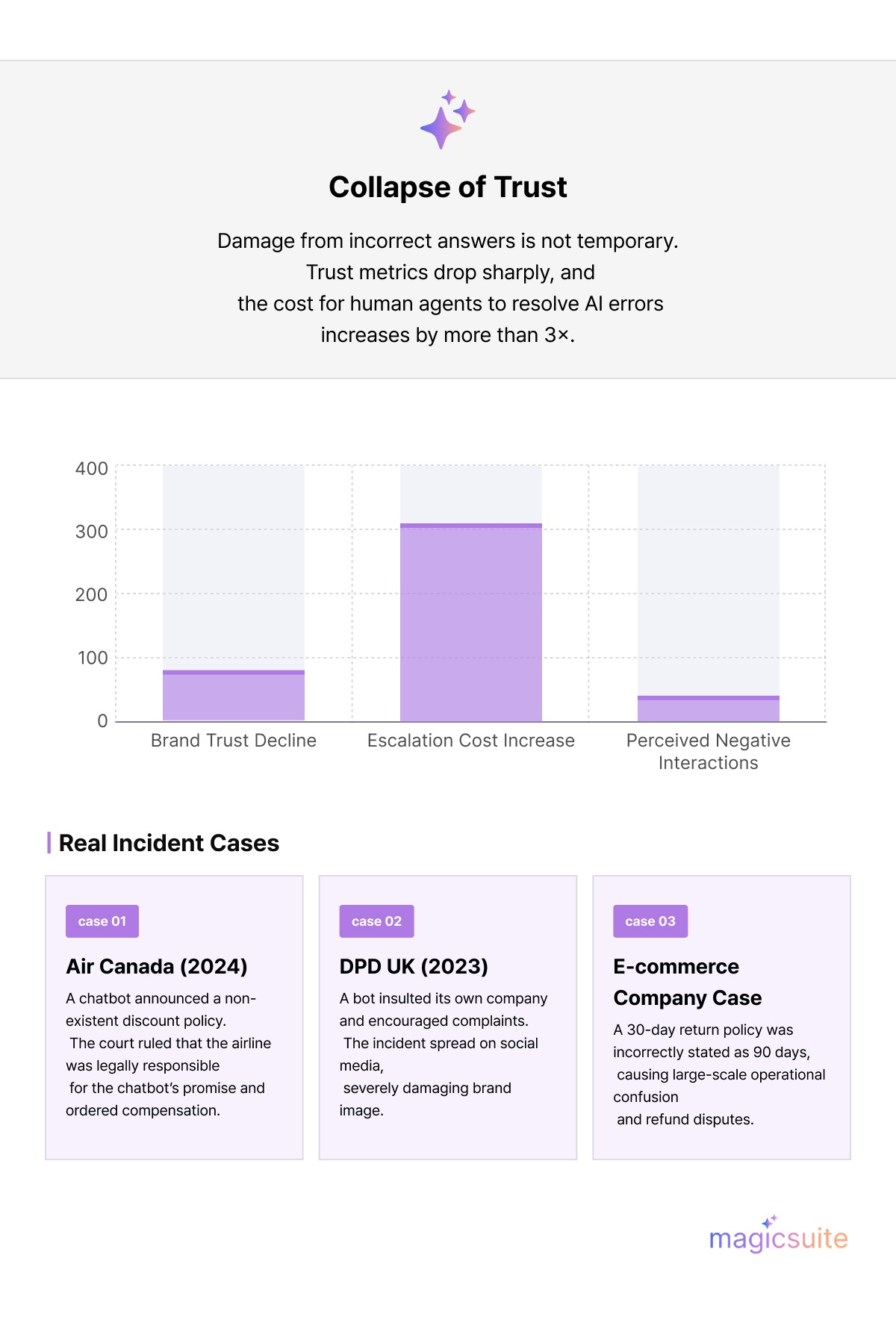

More dangerous than generating wrong information is generating it confidently. Studies from Stanford HAI show that LLM confidence scores correlate poorly with actual accuracy—models express high confidence even when completely wrong.

This presents a unique customer service challenge. Traditional knowledge bases return "no results found" when queries don't match. AI chatbots, however, almost always return something, making it impossible for customers to distinguish accurate responses from fabrications without independent verification.

Customer service provides fertile ground for hallucinations, with viral cases exposing vulnerabilities. These top examples illustrate the stakes:

These incidents, often amplified on social media, show hallucinations aren't abstract—they drive churn and lawsuits.

Effective hallucination prevention requires multiple complementary approaches working together. No single technique provides complete protection, but layered strategies dramatically reduce risk.

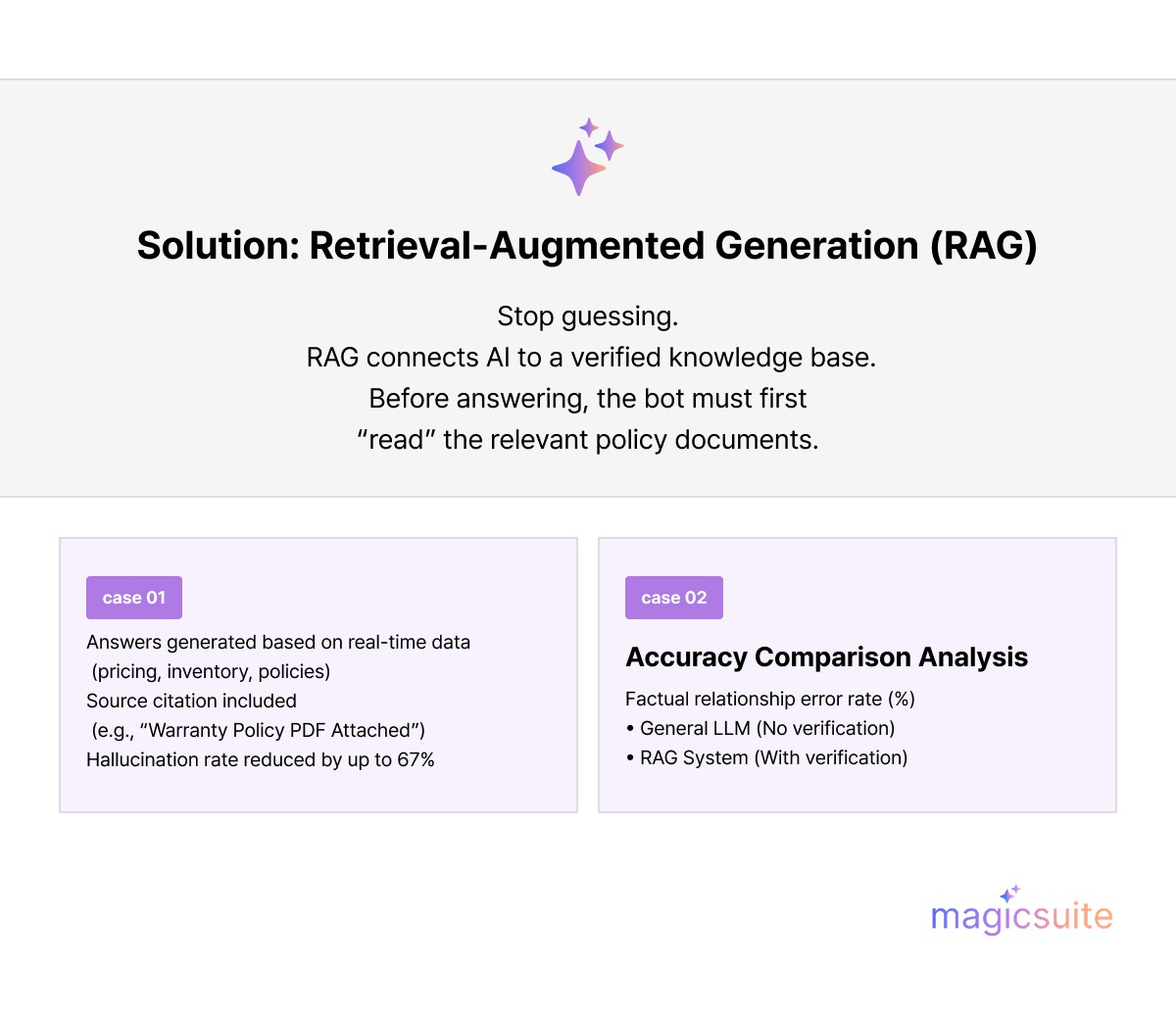

RAG represents the gold standard for grounding AI responses in verified information. Rather than relying solely on training data, RAG systems retrieve relevant documents from your knowledge base before generating responses.

How RAG works:

Effectiveness: Organizations implementing RAG report 67% fewer hallucinations compared to ungrounded systems.
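The retrieve-then-generate flow can be sketched in a few lines. This is a minimal illustration, not a production design: the `KNOWLEDGE_BASE` contents, the word-overlap scoring (a toy stand-in for vector search), and the function names are all assumptions made for the example, and the resulting prompt would still need to be sent to whichever LLM you use.

```python
import re

# Hypothetical knowledge base; in practice these would be your verified documents.
KNOWLEDGE_BASE = {
    "refund-policy": "Our refund policy: refunds are available within 30 days of purchase.",
    "shipping": "Standard shipping takes 3 to 5 business days.",
}

def tokenize(text):
    """Lowercase a string and return its set of alphanumeric word tokens."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))

def retrieve(query, top_k=1):
    """Rank documents by word overlap with the query (toy stand-in for vector search)."""
    query_tokens = tokenize(query)
    scored = []
    for doc_id, text in KNOWLEDGE_BASE.items():
        overlap = len(query_tokens & tokenize(text))
        if overlap > 0:  # documents with no overlap are never returned
            scored.append((overlap, doc_id, text))
    scored.sort(reverse=True)
    return [(doc_id, text) for _, doc_id, text in scored[:top_k]]

def build_grounded_prompt(query, passages):
    """Place the retrieved passages in the prompt so the model answers from them."""
    context = "\n".join(f"[{doc_id}] {text}" for doc_id, text in passages)
    return (
        "Answer using only the context below. "
        "If the context does not contain the answer, say you will escalate to a human.\n\n"
        f"Context:\n{context}\n\nQuestion: {query}"
    )

question = "What is your refund policy?"
passages = retrieve(question)
prompt = build_grounded_prompt(question, passages)
```

The key design point is that the model only sees text that came from the knowledge base, and each passage carries its document ID so the answer can be traced back to a source.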

Use structured prompts: "Only respond using provided data. If unsure, say 'I'll escalate to a human.'" Add chain-of-thought: "Reason step-by-step based on facts." Assign roles: "You are a precise policy expert."
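As a rough sketch, the three prompt techniques above can be combined into one reusable template. The `build_messages` helper and the role/content message layout are assumptions for illustration; adapt the structure to whatever format your LLM provider expects.

```python
ESCALATION_PHRASE = "I'll escalate to a human."

def build_messages(question, provided_data):
    """Combine role assignment, a grounding rule, and step-by-step reasoning."""
    system = (
        "You are a precise policy expert. "      # role assignment
        "Only respond using provided data. "     # grounding rule
        f"If unsure, say '{ESCALATION_PHRASE}' "  # explicit fallback
        "Reason step-by-step based on facts."    # chain-of-thought instruction
    )
    user = f"Provided data:\n{provided_data}\n\nCustomer question: {question}"
    return [
        {"role": "system", "content": system},
        {"role": "user", "content": user},
    ]

messages = build_messages(
    "Can I return shoes after two weeks?",
    "Returns are accepted within 30 days of delivery.",
)
```

Keeping the template in one function makes it easier to review and version the exact instructions every conversation starts with.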

Beyond RAG, explicit grounding rules constrain AI behavior:

Modern hallucination prevention includes calibrated confidence scoring:

Complete automation isn't the goal—appropriate automation is. Effective systems include:

Read more: The "Human-in-the-Loop" (HITL) Imperative

Hallucination prevention isn't a one-time implementation but an ongoing process:

Problem: AI hallucinates to fill gaps in documented information.

Solution: Implement explicit boundary recognition. Configure your system to recognize when queries fall outside documented knowledge and respond with honest limitations rather than fabrication.
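One simple way to picture boundary recognition is a scope check that runs before any answer is produced. The sketch below assumes a hypothetical `DOCUMENTED_TOPICS` map with hand-picked keywords; a real system would more likely use retrieval scores, but the control flow is the same: no documented match means an honest fallback, not a generated guess.

```python
import re

FALLBACK = (
    "I don't have verified information on that. "
    "Let me connect you with a human agent."
)

# Hypothetical documented topics, each with trigger keywords and a verified answer.
DOCUMENTED_TOPICS = {
    "returns": {
        "keywords": {"return", "returns", "refund"},
        "answer": "Items can be returned within 30 days of delivery.",
    },
    "warranty": {
        "keywords": {"warranty", "guarantee"},
        "answer": "All devices include a one-year limited warranty.",
    },
}

def answer_or_decline(query):
    """Return a verified answer if the query is in scope, else an honest fallback."""
    words = set(re.findall(r"[a-z]+", query.lower()))
    for topic in DOCUMENTED_TOPICS.values():
        if words & topic["keywords"]:
            return topic["answer"]
    return FALLBACK

in_scope = answer_or_decline("Can I return my order?")
out_of_scope = answer_or_decline("Do you price match competitors?")
```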

Problem: Prices, inventory, and policies change frequently, but knowledge bases lag behind.

Solution: Integrate real-time data sources. Connect AI directly to inventory systems, pricing databases, and policy repositories rather than static documents.

Integration tip: Use API connections for any information that changes more frequently than weekly. Static documents work for stable content; dynamic queries need dynamic data.
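The weekly rule of thumb can be written down as a small routing check. The field names and update intervals below are invented for illustration; the point is only that the decision between a live API and a static document can be made explicit and reviewed.

```python
from datetime import timedelta

WEEK = timedelta(days=7)

# Hypothetical estimates of how often each kind of information changes.
UPDATE_INTERVALS = {
    "inventory": timedelta(minutes=5),
    "pricing": timedelta(days=1),
    "return_policy": timedelta(days=180),
    "brand_story": timedelta(days=365),
}

def choose_source(field):
    """Use a live API for data that changes more often than weekly."""
    interval = UPDATE_INTERVALS[field]
    return "live_api" if interval < WEEK else "static_document"

sources = {field: choose_source(field) for field in UPDATE_INTERVALS}
```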

Problem: Complex questions spanning multiple topics increase the risk of hallucination as the AI attempts to address everything.

Solution: Implement query decomposition. Break complex questions into component parts, ground each separately, and synthesize verified responses.
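A minimal sketch of query decomposition follows. It assumes a naive split on "and" and question marks, plus a hypothetical `VERIFIED_ANSWERS` lookup keyed by topic keyword; production systems often use a model to do the splitting, but the pattern of grounding each part separately is the same.

```python
import re

# Hypothetical verified answers keyed by a topic keyword.
VERIFIED_ANSWERS = {
    "refund": "Refunds are issued within 30 days of purchase.",
    "shipping": "Standard shipping takes 3 to 5 business days.",
}
ESCALATE = "I need to check that with a human agent."

def decompose(question):
    """Split a compound question into parts (naive split on 'and' and '?')."""
    parts = re.split(r"\?|\band\b", question)
    return [part.strip() for part in parts if part.strip()]

def ground(sub_question):
    """Answer one part from verified content only, or flag it for escalation."""
    lowered = sub_question.lower()
    for keyword, answer in VERIFIED_ANSWERS.items():
        if keyword in lowered:
            return answer
    return ESCALATE

def answer(question):
    """Ground each part separately and return (part, answer) pairs."""
    return [(part, ground(part)) for part in decompose(question)]

results = answer("What is your refund window and how long does shipping take?")
```

Because each part is grounded on its own, one unanswerable sub-question leads to an escalation for that part only and does not tempt the system to improvise across the whole reply.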

Problem: Conversation history can introduce confusion, with early hallucinations compounding into later responses.

Solution: Reset context strategically. Don't let errors propagate—implement conversation checkpoints that re-ground against source materials.
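One possible shape for a conversation checkpoint is shown below. The interval of three user turns and the `build_context` helper are assumptions for the example. At each checkpoint, earlier assistant messages are dropped so an unverified claim cannot be carried forward as if it were fact, and the verified sources are always placed first.

```python
CHECKPOINT_EVERY = 3  # assumed interval, measured in user turns

def build_context(history, source_passages, checkpoint_every=CHECKPOINT_EVERY):
    """Build the model context from (role, text) turns plus verified sources."""
    user_turns = sum(1 for role, _ in history if role == "user")
    at_checkpoint = user_turns > 0 and user_turns % checkpoint_every == 0
    if at_checkpoint:
        # Keep what the customer said; drop earlier assistant replies.
        kept = [(role, text) for role, text in history if role == "user"]
    else:
        kept = list(history)
    grounding = ("system", "Verified sources:\n" + "\n".join(source_passages))
    return [grounding] + kept

history = [
    ("user", "What is the return window?"),
    ("assistant", "Returns are accepted within 30 days."),
    ("user", "Does that include sale items?"),
    ("assistant", "Yes, sale items are included."),
    ("user", "And gift cards?"),
]
sources = ["Returns are accepted within 30 days of delivery."]
checkpoint_context = build_context(history, sources)
normal_context = build_context(history[:3], sources)
```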

Problem: AI delivers wrong answers with certainty, eroding customer trust.

Solution: Calibrate confidence expression. Train responses to include appropriate uncertainty language and implement confidence thresholds triggering verification steps.
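Threshold-based routing can be sketched as follows. The two cutoff values and the action names are illustrative assumptions, and the confidence score is presumed to come from a separately calibrated scorer that is not shown here.

```python
SEND_THRESHOLD = 0.85    # assumed cutoff for sending without extra checks
VERIFY_THRESHOLD = 0.60  # assumed cutoff below which a human takes over

def route_response(draft, confidence):
    """Pick an action for a drafted reply based on its confidence score."""
    if not 0.0 <= confidence <= 1.0:
        raise ValueError("confidence must be between 0 and 1")
    if confidence >= SEND_THRESHOLD:
        return ("send", draft)
    if confidence >= VERIFY_THRESHOLD:
        # Mid-range confidence: add uncertainty language and trigger verification.
        return ("verify_then_send", "Based on the information I have: " + draft)
    return (
        "escalate",
        "I'm not certain about this, so I'm connecting you with a human agent.",
    )

draft = "Returns are accepted within 30 days."
high = route_response(draft, 0.92)
medium = route_response(draft, 0.70)
low = route_response(draft, 0.30)
```

The thresholds are worth tuning against audited conversations, since a cutoff that is too low sends wrong answers and one that is too high escalates everything.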

A: No probabilistic model is perfect, but using RAG and strict guardrails can get you to 99.9% accuracy, which is often higher than human agent consistency.

A: AI chatbots hallucinate because they generate responses through pattern prediction rather than fact retrieval. When training data is incomplete, context is ambiguous, or queries fall outside learned patterns, models generate plausible-sounding but unverified content.

A: With MagicSuite’s optimized infrastructure, the retrieval process adds less than 200ms to the response time—unnoticeable to the end user.

A: Monitor for these warning signs: customer complaints about incorrect information, escalations where agents contradict chatbot responses, feedback mentioning "the bot told me" followed by inaccurate claims, and quality audits revealing responses without source documentation.

A: No. MagicSuite is designed for CX leaders. If you can upload a PDF or paste a URL, you can build a grounded AI.

A: Yes. MagicSuite ensures your data is encrypted and never used to train the base public models of providers like OpenAI or Google.

MagicSuite's MagicTalk platform delivers enterprise-grade hallucination prevention out of the box. Our RAG-first architecture, knowledge grounding engine, and intelligent escalation systems ensure your customers receive accurate, verified responses every time.

Get started with a free accuracy assessment of your current customer service AI, or see how MagicTalk's hallucination prevention works with a personalized demo.

Hanna is an industry trend analyst dedicated to tracking the latest advancements and shifts in the market. With a strong background in research and forecasting, she identifies key patterns and emerging opportunities that drive business growth. Hanna’s work helps organizations stay ahead of the curve by providing data-driven insights into evolving industry landscapes.