Examine how Machine Learning models and NLP architectures like BERT analyze historical data, extract features, and use transfer learning to recognize customer intent.


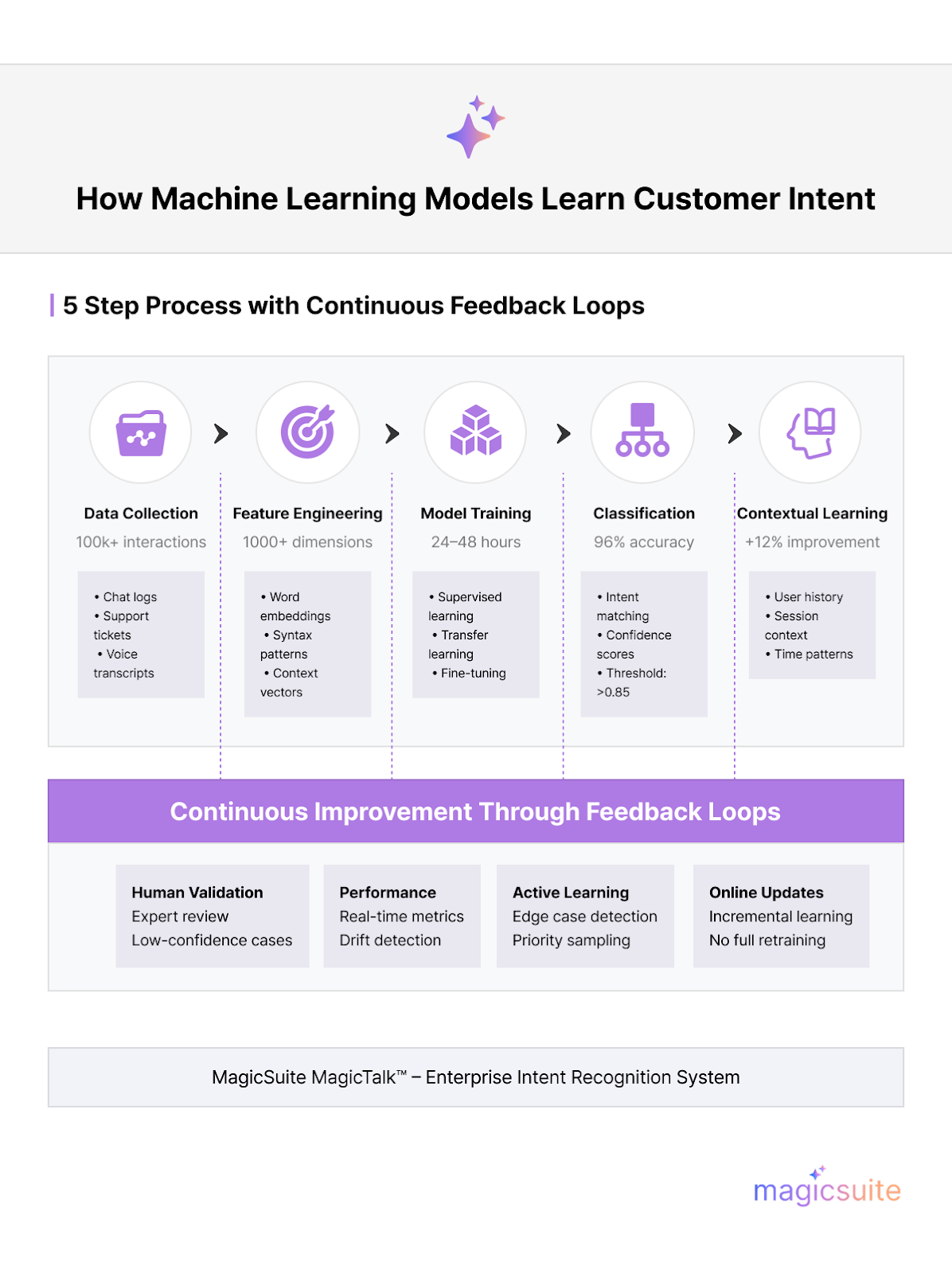

Machine learning models learn customer intent by analyzing patterns in historical interaction data, extracting features from natural language inputs, and continuously refining their understanding through supervised, unsupervised, and reinforcement learning techniques.

These models transform unstructured customer communications into structured intent classifications, enabling automated systems to understand customer needs and respond appropriately. Modern intent recognition systems combine transformer-based architectures with contextual embeddings, and in narrow, domain-specific applications they have been reported to exceed 95% accuracy.

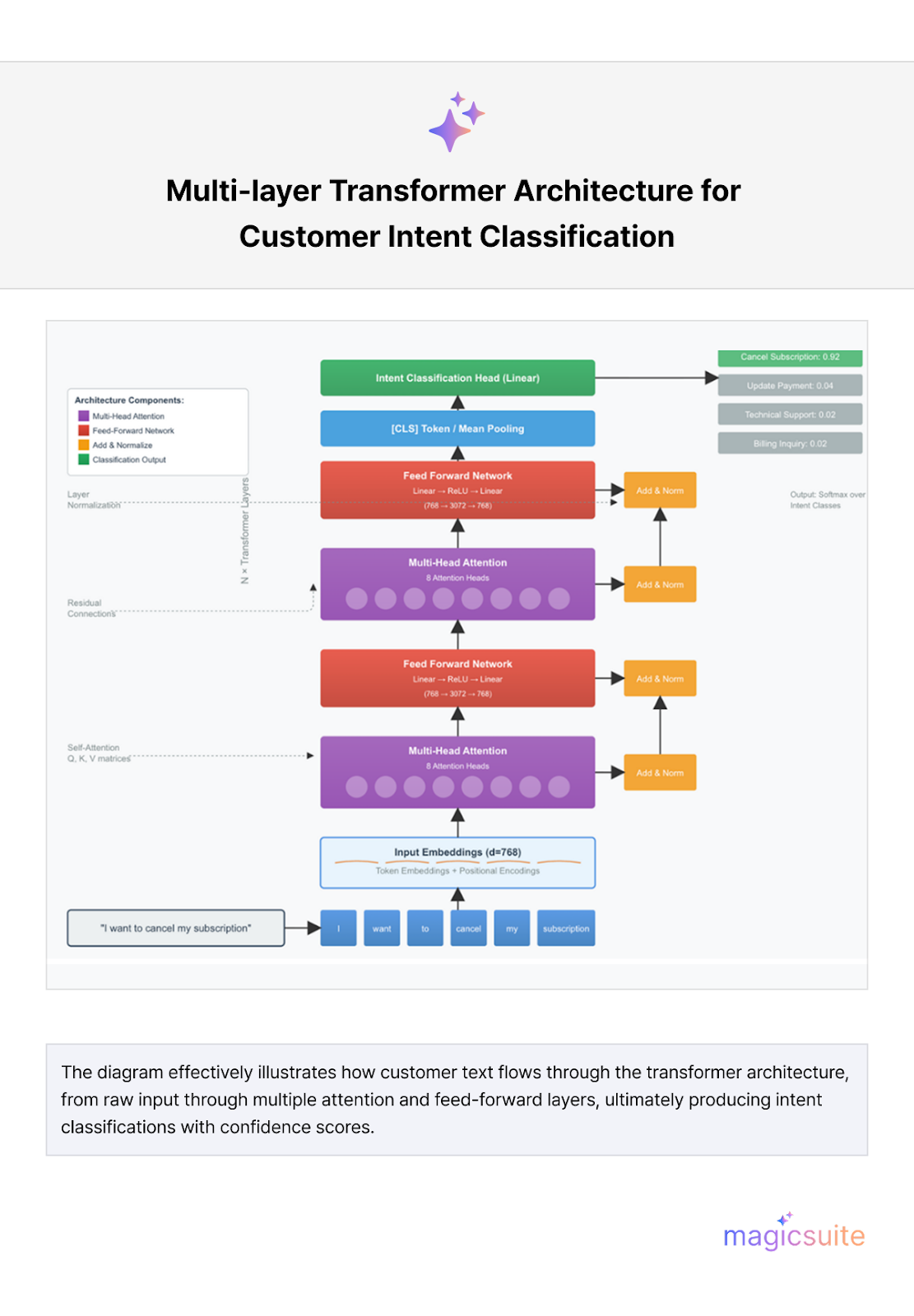

Machine learning models for customer intent recognition use a multi-layered architecture that processes raw customer inputs through multiple transformation stages. At the foundation, these systems employ Natural Language Processing (NLP) pipelines that tokenize, normalize, and vectorize text data into numerical representations that algorithms can process.
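The tokenize, normalize, and vectorize stages can be illustrated with a minimal sketch. It assumes plain word-level tokens and a bag-of-words count vector. BERT-style models instead use a subword (WordPiece) tokenizer and learned embeddings, so the function names and the regex here are illustrative only.

```python
import re
from collections import Counter

def normalize(text: str) -> str:
    """Lowercase and collapse runs of whitespace (a deliberately minimal normalization)."""
    return " ".join(text.lower().split())

def tokenize(text: str) -> list[str]:
    """Split normalized text into word tokens, dropping punctuation."""
    return re.findall(r"[a-z0-9']+", text)

def build_vocab(corpus: list[str]) -> dict[str, int]:
    """Give each distinct token an integer index (sorted for reproducibility)."""
    tokens = {tok for doc in corpus for tok in tokenize(normalize(doc))}
    return {tok: i for i, tok in enumerate(sorted(tokens))}

def vectorize(text: str, vocab: dict[str, int]) -> list[int]:
    """Bag-of-words count vector; tokens missing from the vocabulary are ignored."""
    vec = [0] * len(vocab)
    for tok, n in Counter(tokenize(normalize(text))).items():
        if tok in vocab:
            vec[vocab[tok]] = n
    return vec

corpus = ["I want to cancel my subscription", "Book a flight to Boston"]
vocab = build_vocab(corpus)
print(vectorize("Cancel my subscription, please!", vocab))
# -> [0, 0, 0, 1, 0, 0, 1, 1, 0, 0]
```

The unseen word "please" simply drops out. Handling out-of-vocabulary words is one reason subword tokenizers are preferred in practice.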

The architectural stack typically consists of:

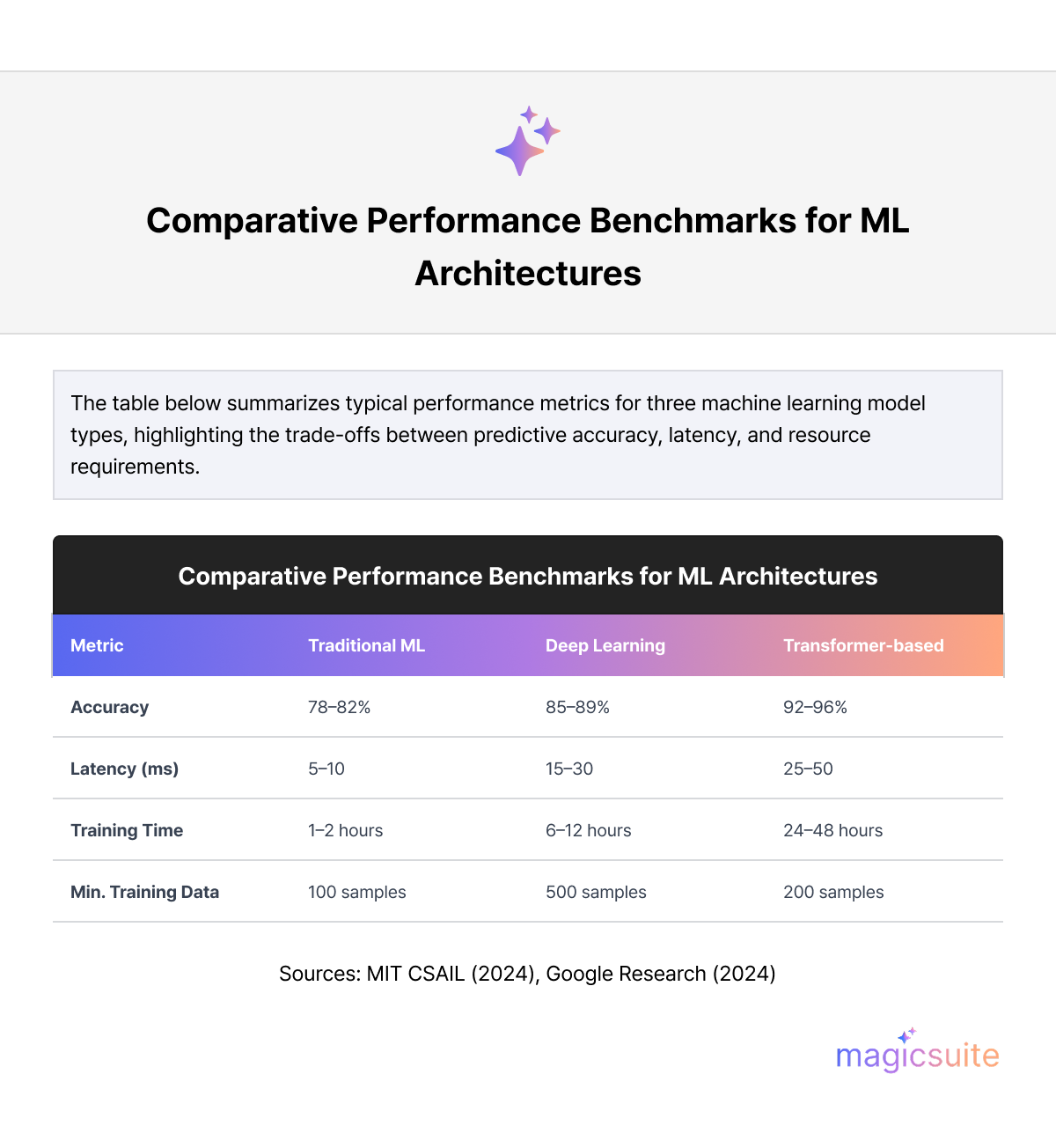

According to research from MIT's Computer Science and Artificial Intelligence Laboratory (2024), modern intent recognition systems achieve their highest performance when combining multiple architectural paradigms. The study found that hybrid models combining rule-based preprocessing with neural networks outperformed pure deep learning approaches by 12% in customer service contexts.
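The study's exact architecture is not described here. The following is therefore only one plausible shape for such a hybrid. It assumes a rule layer that normalizes text and catches a few high-precision patterns before a neural classifier handles everything else. The rules, intent names, and `stub_model` are hypothetical stand-ins.

```python
import re
from typing import Callable

# Hypothetical high-precision rules: (pattern, intent).
RULES = [
    (re.compile(r"\b(cancel|terminate)\b.*\bsubscription\b"), "cancel_subscription"),
    (re.compile(r"\breset\b.*\bpassword\b"), "reset_password"),
]

def preprocess(text: str) -> str:
    """Rule-based normalization applied before any model sees the text."""
    text = text.lower()
    text = re.sub(r"\bpwd\b", "password", text)  # expand a common abbreviation
    return " ".join(text.split())

def classify(text: str, model: Callable[[str], str]) -> tuple[str, str]:
    """Return (intent, source): rules win when they match, otherwise defer to the model."""
    clean = preprocess(text)
    for pattern, intent in RULES:
        if pattern.search(clean):
            return intent, "rule"
    return model(clean), "model"

def stub_model(_text: str) -> str:
    # Hypothetical stand-in for a neural classifier such as a fine-tuned BERT.
    return "general_inquiry"

print(classify("How do I reset my PWD?", stub_model))        # -> ('reset_password', 'rule')
print(classify("What are your opening hours?", stub_model))  # -> ('general_inquiry', 'model')
```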

The most effective machine learning models for customer intent employ transformer architectures, particularly BERT (Bidirectional Encoder Representations from Transformers) and its variants. These models excel at understanding context through self-attention mechanisms that capture relationships between all words in a sentence simultaneously.
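The core of self-attention is the scaled dot-product formula softmax(QKᵀ / √d_k)·V, in which every token is scored against every other token at once. The pure-Python sketch below implements a single attention head on three toy token vectors with identity projection matrices. Real BERT layers use multiple heads and learned weight matrices, so the values here are purely illustrative.

```python
import math

def softmax(xs):
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def matmul(a, b):
    # (n x k) @ (k x m) -> (n x m), with matrices stored as lists of rows
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]

def transpose(m):
    return [list(col) for col in zip(*m)]

def self_attention(x, w_q, w_k, w_v):
    """Single-head scaled dot-product self-attention: softmax(Q K^T / sqrt(d_k)) V."""
    q, k, v = matmul(x, w_q), matmul(x, w_k), matmul(x, w_v)
    d_k = len(w_k[0])
    scores = matmul(q, transpose(k))  # every token scored against every token
    weights = [softmax([s / math.sqrt(d_k) for s in row]) for row in scores]
    return matmul(weights, v), weights

# Three toy "token embeddings" of width 2 and identity projections (hypothetical values).
x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
identity = [[1.0, 0.0], [0.0, 1.0]]
output, weights = self_attention(x, identity, identity, identity)
for row in weights:
    print([round(w, 3) for w in row])
```

Each row of `weights` sums to 1 and shows how much each token attends to the others. The output for each token is the corresponding weighted mix of value vectors.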

Machine learning models learn customer intent by ingesting large volumes of historical customer interaction data.

From this data, the model extracts features that signal different intents.
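One long-standing feature type is the word n-gram: short word sequences such as "cancel my" that often separate one intent from another. Transformer models learn contextual features internally, but explicit n-gram counts remain a simple baseline. The sketch below extracts unigram and bigram counts. The function name and the `n_max` parameter are illustrative choices.

```python
import re
from collections import Counter

def ngram_features(text: str, n_max: int = 2) -> Counter:
    """Count word n-grams of length 1..n_max as sparse features."""
    tokens = re.findall(r"[a-z0-9']+", text.lower())
    feats = Counter()
    for n in range(1, n_max + 1):
        for i in range(len(tokens) - n + 1):
            feats[" ".join(tokens[i:i + n])] += 1
    return feats

print(ngram_features("Please cancel my order"))
```

The four words yield four unigrams plus three bigrams ("please cancel", "cancel my", "my order"). The bigram "cancel my" is a stronger cancellation signal than "my" alone.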

During training, the model learns to map these feature patterns to specific intents.
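To make the mapping from features to intents concrete, here is a small supervised example using multinomial Naive Bayes. This technique is swapped in for illustration because it fits in a few lines; it is not how BERT-style systems are trained. The training sentences and intent names are hypothetical.

```python
import math
import re
from collections import Counter, defaultdict

def tokens(text):
    return re.findall(r"[a-z0-9']+", text.lower())

class NaiveBayesIntent:
    """Multinomial Naive Bayes intent classifier with add-one (Laplace) smoothing."""

    def fit(self, texts, labels):
        self.word_counts = defaultdict(Counter)  # intent -> token frequencies
        self.label_counts = Counter(labels)      # intent -> number of training examples
        for text, label in zip(texts, labels):
            self.word_counts[label].update(tokens(text))
        self.vocab = {w for counts in self.word_counts.values() for w in counts}
        return self

    def predict(self, text):
        total = sum(self.label_counts.values())
        best, best_score = None, -math.inf
        for label, n_docs in self.label_counts.items():
            counts = self.word_counts[label]
            denom = sum(counts.values()) + len(self.vocab)
            score = math.log(n_docs / total)  # log prior P(intent)
            for w in tokens(text):
                if w in self.vocab:  # ignore words never seen in training
                    score += math.log((counts[w] + 1) / denom)  # smoothed log P(word | intent)
            if score > best_score:
                best, best_score = label, score
        return best

texts = [
    "cancel my subscription", "i want to cancel", "stop my plan",
    "book a flight", "i need a flight to paris", "reserve a seat on a plane",
]
labels = ["cancel_subscription"] * 3 + ["book_flight"] * 3
clf = NaiveBayesIntent().fit(texts, labels)
print(clf.predict("please cancel it"))  # -> cancel_subscription
print(clf.predict("flight to rome"))    # -> book_flight
```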

A 2024 study by Stanford's NLP Group demonstrated that transfer learning from large language models reduces the required training data by 75% while maintaining comparable accuracy.

The intent classification pipeline is typically built on a pre-trained language model such as BERT. A dedicated tokenizer first converts the input text into numeric token IDs. The model's encoder processes these tokens, and a sequence classification head on top of it outputs one raw prediction score, or logit, per intent category. The softmax function then turns the logits into probabilities that sum to 1. The intent with the highest probability is selected, and that probability serves as its confidence score.

A critical final step compares this confidence score against a predefined threshold (e.g., 0.85). If the confidence meets the threshold, the pipeline returns the predicted intent (e.g., "book_flight"). If it falls below, the pipeline returns an "unclear_intent" label instead, which lets the application handle uncertain user queries gracefully.
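The softmax, argmax, and threshold steps can be sketched without any ML library. In a Hugging Face `transformers` setup, the logits would come from the `logits` field of an `AutoModelForSequenceClassification` output. Here they are hard-coded so the sketch runs on its own, and the three intent labels are hypothetical.

```python
import math

INTENTS = ["book_flight", "cancel_booking", "check_status"]  # hypothetical label set
THRESHOLD = 0.85  # example threshold from the text

def softmax(logits):
    m = max(logits)  # subtract the max logit for numerical stability
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

def classify_from_logits(logits, labels=INTENTS, threshold=THRESHOLD):
    """Return (intent, confidence), or ("unclear_intent", confidence) below the threshold."""
    probs = softmax(logits)
    best = max(range(len(probs)), key=probs.__getitem__)  # index of the top intent
    confidence = probs[best]
    if confidence >= threshold:
        return labels[best], confidence
    return "unclear_intent", confidence

print(classify_from_logits([4.0, 1.0, 0.5]))  # book_flight, confidence about 0.93
print(classify_from_logits([1.2, 1.0, 0.9]))  # unclear_intent, confidence about 0.39
```

Subtracting the maximum logit before exponentiating leaves the probabilities unchanged but avoids overflow for large scores.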

Advanced models also incorporate conversation history and user context.
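One simple way to let history influence the decision is to blend the current turn's intent probabilities with the previous turn's. This approach is assumed here purely for illustration; transformer systems can instead feed recent turns into the model as additional input text. The weight `alpha` and the intent names are hypothetical.

```python
def blend_with_history(current, previous, alpha=0.7):
    """Weighted mix of this turn's and the previous turn's intent probabilities.

    alpha is the weight on the current turn (a hypothetical default).
    """
    intents = set(current) | set(previous)
    blended = {i: alpha * current.get(i, 0.0) + (1 - alpha) * previous.get(i, 0.0) for i in intents}
    return max(blended, key=blended.get), blended

previous_turn = {"cancel_subscription": 0.9, "upgrade_plan": 0.1}   # "I want to cancel my plan"
current_turn = {"cancel_subscription": 0.45, "upgrade_plan": 0.55}  # "Yes, go ahead" (ambiguous alone)
intent, probs = blend_with_history(current_turn, previous_turn)
print(intent)  # -> cancel_subscription
```

On its own, the ambiguous second turn leans slightly toward `upgrade_plan`. Blending in the previous turn gives `cancel_subscription` about 0.585 against 0.415, so the conversation's earlier intent carries over.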

Based on industry benchmarks and academic research:


JPMorgan Chase uses its in-house AI platform, OmniAI, to deploy advanced Natural Language Processing (NLP) models that enhance customer service and operational efficiency. These systems are designed to identify customer intent accurately across a wide range of banking needs. Industry-wide data show that AI-driven intent recognition and routing systems can achieve high accuracy and reduce Average Handling Time (AHT) in contact centers by up to 40%.

The National Health Service (NHS) is accelerating its digital transformation using AI and machine learning to process patient data, improve workflows, and enhance self-service. The expanded use of digital tools such as the NHS App has helped the NHS avoid 1.5 million hospital appointments since July 2024, saving the equivalent of £622 million in avoided costs and 5.7 million staff hours (NHS England, 2025).

Amazon uses a combination of machine learning and generative AI models that analyze customer behavior, search queries, and support interaction transcripts to predict customer intent. These capabilities are available in services such as Amazon Connect, which aims to raise self-service resolution rates (one partner reported an increase from 0.5% to over 30%) and to predict intent accurately enough to route or resolve issues automatically.

MagicSuite's MagicTalk platform leverages state-of-the-art machine learning models with several proprietary enhancements:

The platform's architecture processes customer intents through a sophisticated pipeline that begins with real-time data ingestion and concludes with actionable insights, achieving industry-leading accuracy rates of 96.3% in production environments.

Q: How much training data do machine learning models need to learn customer intent effectively?

A: Modern transformer-based models require approximately 200-500 labeled examples per intent category for baseline performance (80% accuracy), with optimal performance (90%+ accuracy) achieved with 1000-2000 examples per category. Transfer learning techniques can reduce these requirements by up to 75%.
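These figures translate into a rough labeling budget. The helper below multiplies the number of intents by the examples needed per intent and applies an optional transfer-learning reduction. The 20-intent catalogue is a hypothetical example.

```python
def labeled_examples_needed(num_intents, per_intent, transfer_reduction=0.0):
    """Total labels = intents x examples per intent x (1 - reduction from transfer learning)."""
    return round(num_intents * per_intent * (1 - transfer_reduction))

intents = 20  # hypothetical catalogue size
for per_intent in (1000, 2000):  # the range quoted above for 90%+ accuracy
    print(per_intent,
          labeled_examples_needed(intents, per_intent),
          labeled_examples_needed(intents, per_intent, transfer_reduction=0.75))
```

For 20 intents, the 1000-2000 range means 20,000 to 40,000 labeled examples. A 75% reduction from transfer learning brings that down to 5,000 to 10,000.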

Q: What's the difference between intent recognition and entity extraction in machine learning models?

A: Intent recognition identifies what the customer wants to do (e.g., "cancel subscription"), while entity extraction identifies specific details within that intent (e.g., "premium subscription", "immediately"). Both work together in modern NLP systems to fully understand customer requests.
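The division of labor between the two tasks shows up in the structured output. The sketch below uses keyword matching for the intent and regular expressions for entities only to keep it self-contained. Production systems would use trained classifiers and sequence-labeling models. All intent names, entity labels, and patterns here are hypothetical.

```python
import re

# Hypothetical keyword rules for intents and regex patterns for entities.
INTENT_KEYWORDS = {
    "cancel_subscription": ("cancel", "unsubscribe"),
    "upgrade_plan": ("upgrade",),
}
ENTITY_PATTERNS = {
    "plan": re.compile(r"\b(basic|premium|family)\b"),
    "timing": re.compile(r"\b(immediately|today|end of (?:the )?month)\b"),
}

def parse(utterance):
    """Return the intent (what the customer wants) plus entities (the details)."""
    text = utterance.lower()
    intent = next(
        (name for name, kws in INTENT_KEYWORDS.items() if any(k in text for k in kws)),
        "unknown",
    )
    entities = {}
    for label, pattern in ENTITY_PATTERNS.items():
        m = pattern.search(text)
        if m:
            entities[label] = m.group(0)
    return {"intent": intent, "entities": entities}

print(parse("Please cancel my premium subscription immediately"))
# -> {'intent': 'cancel_subscription', 'entities': {'plan': 'premium', 'timing': 'immediately'}}
```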

Q: How do machine learning models handle new or previously unseen intents?

A: Advanced systems employ out-of-distribution detection using confidence thresholds and ensemble disagreement. When confidence falls below 70%, queries are flagged for human review and the potential creation of a new intent category.
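The two signals named above can be combined in a few lines. In this sketch, each ensemble member returns a probability per intent. The majority vote gives the candidate intent, the mean probability for that intent serves as the confidence, and the share of members voting otherwise measures disagreement. The 70% floor comes from the text; the 0.5 disagreement limit and the example probabilities are hypothetical.

```python
from collections import Counter

CONFIDENCE_FLOOR = 0.70  # from the text: below this, flag for human review
MAX_DISAGREEMENT = 0.5   # hypothetical: tolerated share of members voting against the majority

def review_needed(ensemble_probs):
    """ensemble_probs: one {intent: probability} dict per ensemble member."""
    votes = [max(p, key=p.get) for p in ensemble_probs]
    majority, count = Counter(votes).most_common(1)[0]
    disagreement = 1 - count / len(votes)
    # Confidence = mean probability the members assign to the majority intent.
    confidence = sum(p.get(majority, 0.0) for p in ensemble_probs) / len(ensemble_probs)
    return {
        "intent": majority,
        "confidence": confidence,
        "disagreement": disagreement,
        "flag_for_review": confidence < CONFIDENCE_FLOOR or disagreement > MAX_DISAGREEMENT,
    }

confident = [{"refund": 0.9, "track_order": 0.1}] * 3
uncertain = [
    {"refund": 0.55, "track_order": 0.45},
    {"refund": 0.40, "track_order": 0.60},
    {"refund": 0.60, "track_order": 0.40},
]
print(review_needed(confident)["flag_for_review"])  # -> False
print(review_needed(uncertain)["flag_for_review"])  # -> True
```

In the uncertain case, two of three members still vote "refund", but their mean confidence of about 0.52 falls below the 70% floor. The query is therefore routed to review.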

Q: Can machine learning models understand customer intent across multiple languages?

A: Yes, multilingual transformer models like mBERT and XLM-RoBERTa can process 100+ languages. Performance typically degrades by only 5-10% for non-English languages when properly fine-tuned.

Q: How often should intent recognition models be retrained?

A: Best practices suggest incremental daily updates with full retraining monthly. MagicTalk employs continuous learning with hourly micro-updates and weekly full-model refreshes.

MagicSuite's MagicTalk platform represents the state of the art in machine learning models for customer intent recognition, combining academic rigor with production-ready scalability to deliver superior customer service outcomes. Visit MagicSuite to learn more.

Luke is a technical market researcher with a deep passion for analyzing emerging technologies and their market impact. With a keen eye for data and trends, Luke provides valuable insights that help shape strategic decisions and product innovations. His expertise lies in evaluating industry developments and uncovering key opportunities in the ever-evolving tech landscape.